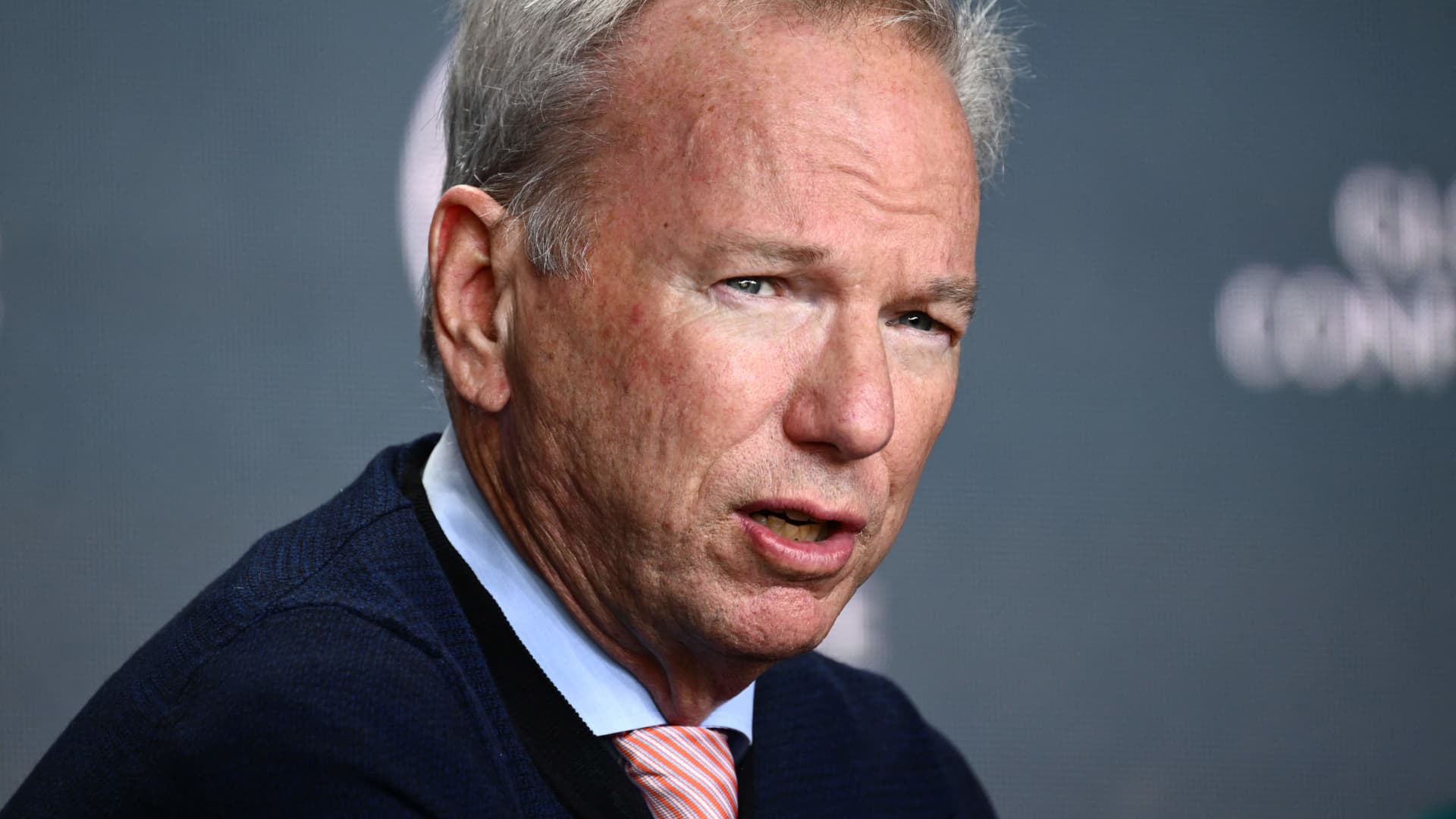

Misinformation in the 2024 election will be rampant because AI tools are so accessible, says Eric Schmidt. He is concerned that social media platforms are failing to guard against false AI-generated content and that trust and safety teams are being cut back. Schmidt suggests marking AI-generated content and holding users accountable when they break the law.

The volume of humanlike text that AI can produce is orders of magnitude greater than before. It will be different this time, and it will be really annoying.

I understand that, but the amount of money that gets fed into political campaigns already generates staggering amounts of spurious text. It’s hard to remember what happened the day before yesterday, but “fake news” originally meant sites that were set up to vaguely look like news sites, all for the purpose of pushing one or two entirely made-up propaganda pieces. Yes, deep learning can partly automate this, but automation isn’t necessary in this case.

There comes a point of diminishing returns with spurious text, and I feel like we’re already past that point.