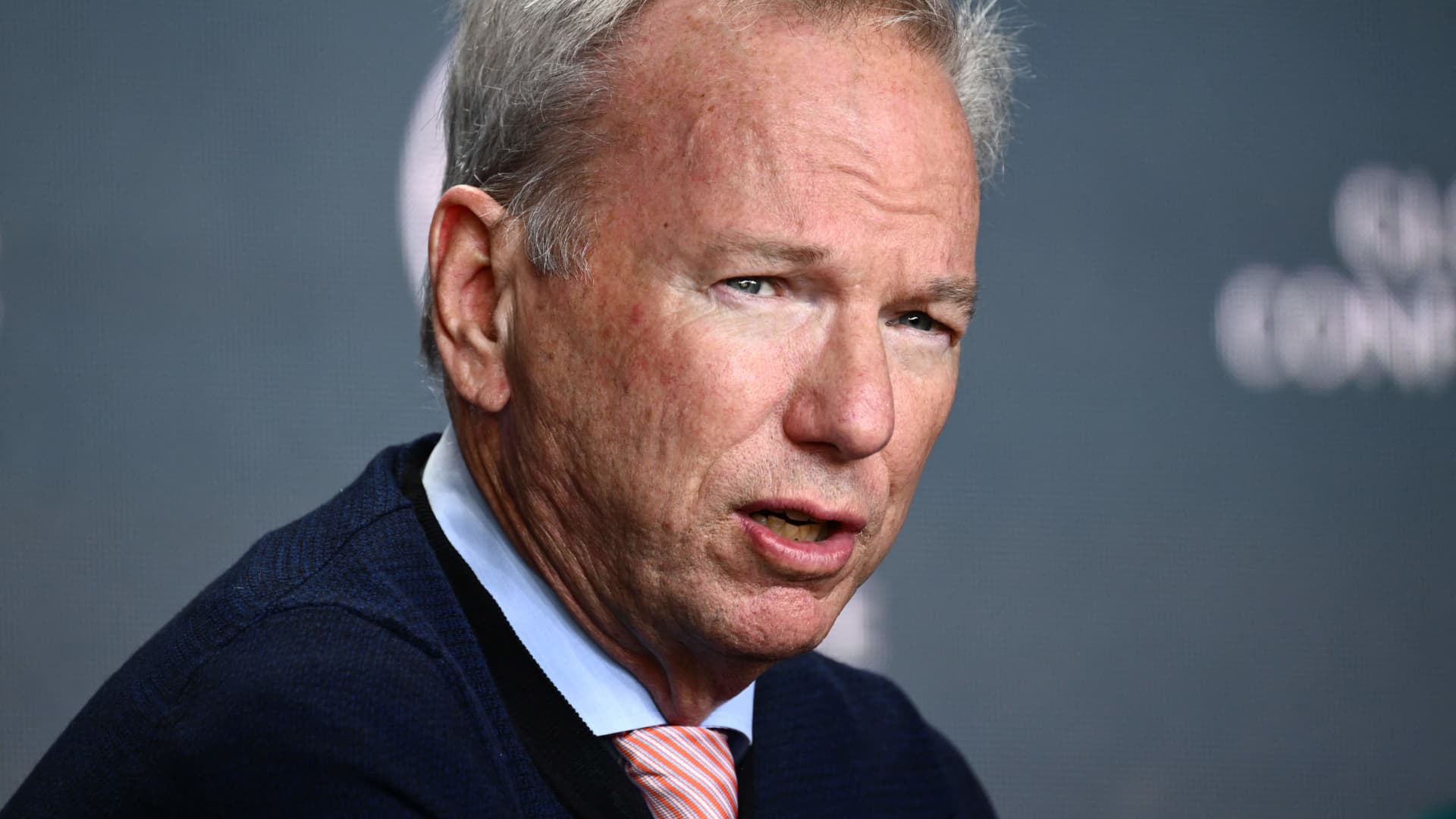

Misinformation in the 2024 election will be rampant due to accessible AI tools, says Eric Schmidt. Social media’s failure to protect against false AI-generated content and the reduction of trust and safety groups are concerns. Schmidt suggests marking content and holding users accountable for law violations.

they haven’t solved it yet

Google hasn’t solved it yet either. You’ve probably all experienced the deluge of AI-generated garbage with almost zero pertinent information clogging up search results. And those aren’t even politically motivated. It’s getting harder and harder to find actual information from actual humans.

Why is it expected that social media companies will find a solution for this? Political discussions are part of the democratic process so why would any of the big social networks (who are effectively advertising companies) have an incentive to foster the fair and open exchange of ideas and information?

Because we have surrendered so much control of our lives to these companies we can hardly envision an alternative.

The only thing deep learning has done is make forgery more accessible. But Stalin was airbrushing unpersons out of photos back in the 1930s, so in principle this is nothing new.

When it comes to politics, there’s already enough money floating around that you don’t need deep learning to clog the internet with shit. So personally I’m not expecting anything different.

I’m expecting the exact same thing as every year: shitty candidates spewing lies and fake promises at each other until the voters get to choose between the two options that corporate America has decided we can vote on.

Can’t believe people still spew “both sides” in 2023

That is absolutely not what I’m saying. The whole thing is a sham because of corporate interests, lobbyists, super PACs, the electoral college, super delegates, corporate media bias, etc. What I’m saying is that democracy was stolen from us.

Well can’t disagree that it’s creeping damn close to corporatocracy here. Sucks having no actual left wing representation outside of like a handful of congresspeople.

If the DNC had let us have Bernie in 2016 instead of jerking themselves off with their super delegates we would be in a lot better place today. I fully believe that.

The volume of humanesque text that can be produced by AI is orders of magnitude greater. It will be different this time and it will be really annoying.

I understand that, but the amount of money that gets fed into political campaigns already generates staggering amounts of spurious text. It’s hard to remember what happened the day before yesterday, but “fake news” originally meant sites that were set up to vaguely look like news sites, all for the purpose of pushing one or two entirely made-up propaganda pieces. Yes, deep learning can partly automate this, but automation isn’t necessary in this case.

There comes a point of diminishing returns with spurious text, and I feel like we’re already past that point.

Former CEO at Online Manipulation warns us about online manipulation. Hm.