For years I’ve had a dream of building a rack-mounted PC capable of splitting its resources to host multiple GPU-intensive VMs:

- a few gaming VMs

- a VM for work that can run DaVinci Resolve and Blender renders

- an LLM server

- a Stable Diffusion server

- media server

Just to name a few possibilities…

Every time I’ve looked into it, it seemed like the technology just wasn’t there yet. I remember a few years ago Linus TT took a shot at it, but in the end suggested the technology (for non-commercial entities) just wasn’t in a comfortable spot yet.

So how far off are we? Obviously AI focused companies seem to make it work, but what possibilities exist for us self-hosters who might also want to run multiple displays in addition to the web gui LLM servers? And without forking out crazy money for GPU virtualization software licenses?

The technology has “been there” for a while, and it’s trivial to set up what you’re asking for. The issue is that games have anti-cheat engines that get triggered by the virtualization and ban you.

Which games do that? Running GPU passthrough on Windows for Destiny and Halo, at least, gave me zero issues for years.

I’m surprised. I was pretty sure anything with BattlEye flat-out rejected virtualization.

I thought Destiny used BattlEye, but I must be mistaken on one of these points.

Most likely everything Steam + VAC or Denuvo. There’s a lot of discussion on that topic around the web.

Anything using Vanguard (such as Valorant and League of Legends), BattlEye (such as PUBG, Destiny 2, and Rainbow Six Siege), or Easy Anti-Cheat (such as Fortnite) blocks virtual machines. Vanguard is especially bad because, as of its last update, it will not let you run the game with Intel VT-x/AMD-V enabled, even if you are running on bare metal.

this just makes me wanna install bare-metal goody-two-shoes Windows and cheat using a $5 Arduino

I’ve been doing exactly that at home for a couple years now. First with Parsec, now Sunshine/Moonlight.

Host is Proxmox on a Ryzen 5800X with 64 GB RAM. GPU is a 2070 Super, with vGPU-patched drivers from https://gitlab.com/polloloco/vgpu-proxmox

When I’m gaming I’ll dedicate the full 8 GB to my Windows VM; otherwise I split it into 2 or 4 GB chunks for Jellyfin or my home camera monitoring. 8 GB can’t be split very many ways, and most things require at least 2 GB to run.
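To make the split concrete, here is a minimal Python sketch of how many equal-size chunks fit on the card. The 8 GB total and the 2 GB and 4 GB chunk sizes come from the paragraph above; the function name is made up for illustration, and it assumes every VM gets the same size chunk.

```python
def vgpu_slots(total_vram_gb: int, profile_gb: int) -> int:
    """Return how many equal-size vGPU chunks fit in the card's VRAM."""
    if profile_gb <= 0:
        raise ValueError("profile size must be positive")
    return total_vram_gb // profile_gb


# 8 GB card, as in the comment above; chunk sizes are the ones mentioned there.
for profile in (2, 4, 8):
    print(f"{profile} GB chunks -> {vgpu_slots(8, profile)} VM(s)")
```

With a 2 GB floor per workload, four VMs is the ceiling on an 8 GB card, which is why the amount of VRAM ends up being the real limit.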

Locally at home I can run 1440p 60fps rock solid over wifi on any device, from my phone/old laptop/apple tv/raspberry pi. Remote I can do 1080p60, but a bit more hit or miss depending on my network connection.

I’ve experimented with LLMs through the same Windows VM, or on an Ubuntu dev VM. It works the same way. I’m thinking of transitioning my gaming VM to Linux too.

The amount of VRAM is the hard limitation to get past, the virtualization tech itself has been there for a while.

But to be perfectly honest… it really was just a “let’s see if I could do this” type of task. Direct GPU passthrough is more straightforward, and it’s not really worth splitting 8 GB these days. Unless you get a card with significantly more VRAM, passthrough is much less work.

This is really amazing! In theory, can you give 2 GB each to 4 different VMs?

Sure, but you’ll get diminishing returns most likely as consumer hardware doesn’t really have the resources to scale that way very well if all the VMs are running demanding apps simultaneously.

Even for something like 4 VMs that just do NVENC, there are limits on how many streams the GPU can handle. I think there’s another patch that lets you raise that, but at some point you’ll run out of resources quickly. Even powerful consumer gear isn’t really designed to be used by more than one user/app, and it starts to show the more you virtualize and split those resources.

How does the vGPU compare to running it on the bare metal? Last I tried things were painful but technically usable.

I don’t see any performance differences with the vgpu actually. I have more performance bottlenecks with the CPU, and my RAM isn’t the fastest, so I think I’m more CPU limited. Benchmarks I have run that are GPU focused seem to show little to no difference from what the physical card would do.

Hmm. I’m running a 3090 and 4090. Looks like vgpu is not possible yet for those cards.

Yeah unfortunately. 20xx is last generation supported so far via the patch, not sure if support for later cards is coming or not.

Have you tried or do you have any knowledge about utilizing the display ports on the gpu while virtualizing either in lieu or in tandem with streaming displays?

No, but I think you’d have some problems. Only the host has access to the actual DisplayPort outputs; all the vGPUs have virtual displays, and I don’t think there’s a way to make them use the physical outputs.

I bought a cheap used Dell R710 on Facebook Marketplace for like $100 or so, as well as a UPS, rack, 10G switch, etc., from various other sellers. All told, I’ve got about $500 in my server setup.

Installed Proxmox on it. It’s “free” if you don’t buy a license. You just have to put up with a little nag screen when you open the control panel, but it still works 100%, much like WinRAR.

Works great.

Edit: just realized this is in c/selfhosted AND I misunderstood the post. I’m gonna leave it here just on the off chance it’s useful to somebody, but I acknowledge it’s not what you’re looking for.

Btw just in case you aren’t aware, the nag can be done away with. I don’t have a link off the top of my head but it’s out there.

I run a few servers myself with proxmox. FYI there is a script that removes that nag screen as well as configures some other useful things for proxmox self-hosters.

You’re not really describing your use-case here. Are you just trying to run a server that does all your rendering for you so you can play games elsewhere? Yes, that’s totally possible.

If you’re trying to describe a business…no, it’s not possible, scalable, or profitable.

I’m curious as to what your intentions are here though.

I have a workstation I use for video editing/vfx as well as gaming. Because of my work, I’m fortunate to have the latest high end GPUs and a 160" projector screen. I also have a few TVs in various rooms around the house.

Traditionally, if I want to watch something or play a video game, I have to go to the room with the jellyfin/plex/roku box to watch something and am limited to the work/gaming rig to play games. I can’t run renders and game at the same time. Buying an entire new pc so I can do both is a massive waste of money. If I want to do a test screening of a video I’m working on to see how it displays on various devices, I have to transfer the file around to these devices. This is limiting and inefficient to me.

I want to be able to go to any screen in my house: my living room TV, my large projector in my studio room, my tablet, or even my phone and switch between:

- my workstation display running on a Windows 10 VM

- my Linux VM with YouTube or a Jellyfin player that I use as a daily driver

- a Fedora or Windows VM dedicated to gaming, maybe SteamOS

- maybe a friend comes over for a LAN party and we can both game without having to set up a 2nd rig

- I want to host an LLM or Stable Diffusion server without having to buy a new GPU with enough VRAM to run SDXL

What you’re describing is mostly a networking issue. I’m also pretty suspect about your setup and wishes. You definitely don’t work for a large VFX studio, and you’re not using this as described for CAD work. I’m going to guess this entire setup is for your anime and incest rendering farm.

This is a ridiculous question for anyone with this amount of hardware in their home already that’s using it on a daily basis to actually work. You would also not be “running renders” if this was hardware provided by a company you work for.

Whatever is being asked here is for a shady ass person. Don’t help them.

That’s such a weird leap in logic to jump to. Are you okay?

I’m not the one making wild accusations about somebody wanting to selfhost a gpu server to edit…incest porn or whatever it is you’re on about.

No idea what lie you think I’m telling. 🤷♂️

… what?

Them: “I want a centralized place to handle all my graphics stuff, so I can access graphically intensive things from any device.”

You: “Must be incest renders because you already have hardware and say you use it for work.”

So according to you, contractors don’t exist, iPhones can play PC games, and anyone wanting to split PC resources between multiple use cases is shady.

What’s ridiculous is that you seem to think extreme paranoia is a normal thing in everyday life.

Wow. Where is all this hate coming from?

People like to experiment, tinker, and try things in their home lab that would scale up in a business, just to prove they can do it. That’s innovation. We should celebrate it, not quash people.

I know you asked about VMs, but fwiw there are GPU-capable containers now: https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/latest/install-guide.html

Used one of these and the setup is as easy as it sounds. It can run Houdini, Stable Diffusion.
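For anyone wanting to try the container route, here is a small Python sketch that builds the kind of smoke-test command commonly used after installing the toolkit. It assumes Docker plus the NVIDIA Container Toolkit are already installed on the host; the `ubuntu` image and the helper name are just illustrative choices, and the script only prints the command rather than running it.

```python
import shlex


def gpu_container_cmd(image: str = "ubuntu", gpus: str = "all") -> list[str]:
    """Build a `docker run` command that exposes GPUs to a throwaway container.

    Assumption: the NVIDIA Container Toolkit is installed, so `--gpus` works
    and `nvidia-smi` is made available inside the container.
    """
    return ["docker", "run", "--rm", "--gpus", gpus, image, "nvidia-smi"]


# Print the command so it can be inspected before running it by hand.
print(shlex.join(gpu_container_cmd()))
```

If the printed command lists your card when run on the host, the container can see the GPU, and several containers can share it without any vGPU licensing.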

I’ve also wanted to do this for a while, but there were always a few too many barriers to actually spin up the project. Here’s just a brain dump of things I’ve seen recently.

vGPUs continue to be behind a license. But there is now vgpu_unlock.

L1T just showed off PCIe “fabric” from Liqid that can switch physical devices between machines.

Turning VMs on and off isn’t as slick as either of the above, but that is doable today. You’ll just have to build all the switching automation yourself. That could just be a shell script running QEMU/libvirt commands, at a minimum.
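As a rough idea of what that switching automation could look like, here is a minimal Python sketch that wraps `virsh`. It assumes libvirt is in use, that the two domains share one passed-through GPU, and that the domain to stop is currently running; the domain names in the usage note are hypothetical.

```python
import subprocess
import time
from typing import Callable, Sequence


def virsh(args: Sequence[str]) -> str:
    """Run a virsh subcommand and return its stdout (raises on failure)."""
    result = subprocess.run(
        ["virsh", *args], check=True, capture_output=True, text=True
    )
    return result.stdout.strip()


def switch_gpu_vm(
    stop: str,
    start: str,
    run: Callable[[Sequence[str]], str] = virsh,
    timeout_s: float = 120.0,
    poll_s: float = 2.0,
) -> None:
    """Shut down the VM holding the GPU, wait for it to stop, then start the other."""
    # `virsh shutdown` only asks the guest to power off and returns immediately,
    # so poll the domain state before handing the GPU to the next VM.
    run(["shutdown", stop])
    deadline = time.monotonic() + timeout_s
    while run(["domstate", stop]) != "shut off":
        if time.monotonic() > deadline:
            raise TimeoutError(f"{stop} did not shut down within {timeout_s}s")
        time.sleep(poll_s)
    run(["start", start])
```

Calling `switch_gpu_vm("llm-server", "gaming-win10")` would then free the GPU from one guest and boot the other. The `run` parameter exists so the logic can be dry-run or tested without touching real VMs.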

Why are vGPUs behind a license? They work fine on Linux as they are part of KVM and Virtio.

Why? Product segmentation I suppose. Last I looked, the Virtio project’s efforts were still work-in-progress. The Arch wiki article corroborates that today. Inconsistent behavior across brands and product lines.

What are you talking about? I thought we were talking about Proxmox.

The OP didn’t mention Proxmox in their post. I’ve been speaking generally, not about any specific OS. For example, Nvidia’s enterprise offerings include a license to use their “GRID” vGPU tech (and the enabled feature flag in the driver).

Thank you

> Every time I’ve looked into it, it seemed like the technology just wasn’t there yet. I remember a few years ago Linus TT took a shot at it, but in the end suggested the technology (for non-commercial entities) just wasn’t in a comfortable spot yet.

I had a server in my basement running Proxmox (actually ended up doing it all manually eventually), with a Windows gaming VM and a handful of utility Linux servers, around 2015 I think. The only problem was Windows games using kernel-level anti-cheat.

I get it really comes down to GPU sharing and I think it’s doable on consumer GPUs now but I’m not sure about gaming. Honestly the tech has been here for a long time. But companies like NVIDIA held on forever to the GPU resource sharing features and kept it away from consumer cards.

I’m a bit older these days and have gone through many generations of hardware with a different setup. I keep two or more GFX cards on hand. The latest always goes to my workstation, while last gen is thrown in my server and used by all my Docker containers. Then I have an older Xeon with 24 bays that I use for storage.

OK, but why?

Well, for fun and as a cool hobby project, I get that. That is enough to justify it, like any other crazy hobbyist project. Don’t let me stop you.

But in the spirit of practicality and speaking hypothetically: Why set it up that way?

For self-hosting why not build a few standalone machines and run off that instead? The reason to do this large scale is optimizing resources so you can assign a smaller pool of hardware to users as they need it, right? For a home set of two or three users you’d probably notice the fluctuations in performance caused by sharing the resources on the gaming VMs and it would cost you the same or more than building a couple reasonable gaming systems and a home server/NAS for the rest. Way less, I bet, if you’re smart about upgrades and hand-me-downs.

Yep this has been my hold up. It is mostly just a solution in search of a problem.

The best use case I have come up with is if you have a nice computer and an extra GPU lying around. You could turn the single computer into two workstation/gaming computers.

Yeah, but if you’re this deep into the self-hosting rabbit hole, what circumstances lead to having an extra GPU lying around without an extra everything else, even if it’s relatively underpowered? You’ll probably be able to upgrade it later by recycling whatever is in your nice PC next time you upgrade something.

At this point most of my household is running some frankenstein of phased out parts just to justify my main build. It’s a bit of a problem, actually.

Mainly because running multiple desktop machines adds up to a lot of power, even at idle. If you power them off and on as needed it’s better, but then it’s not as convenient. Of course, if you leave a single machine with multiple GPUs on 24/7 that will also eat a lot of power, but it will be less than multiple machines turned on 24/7 at least.

And the physical space taken up by multiple desktop machines starts to add up significantly, particularly if you live in an apartment or smaller house.

I guess that depends on the use case and how frequently both machines are running simultaneously. Like I said, that reasoning makes a lot of sense if you have a bunch of users coming and going, but the OP is saying it’s two instances at most, so… I don’t know if the math makes virtualization more efficient. It’d probably be more efficient by the dollar if the server is constantly rendering something in the background and you’re only sapping whatever performance you need to run games when you’re playing.

But the physical space thing is debatable, I think. This sounds like a chonker of a setup either way, and nothing is keeping you from stacking or rack-mounting two PCs, either. Plus if that’s the concern you can go with very space-efficient alternatives, including gaming laptops. I’ve done that before for that reason.

I suppose it’s why PC building as a hobbyist is fun, there are a lot of balance points and you can tweak a lot of knobs to balance many different things between power/price/performance/power consumption/whatever else.

I have upgraded my GPU on my desktop without upgrading anything else. Leaving me with a spare GPU and no other hardware.

For self-hosting, I have also pulled GPUs out of systems to keep the power requirements down, as most of the time onboard GPUs are just fine for self-hosting applications. That also leaves me with a spare GPU.

However, as GPUs have become more popular for compute over the years, there are more arguments for keeping the GPU in a home server. So I can see how this is going away.

Maybe my situation is just unique, but due to my job I’m able to have a single workstation with multiple high VRAM GPUs. I wouldn’t be able to justify the cost of buying new GPUs and an entire rig just for gaming or AI image/video. I wouldn’t foresee more than 2 VMs using the GPU in high priority at any single time.

When I’m not working this system sits idle or is running renders. Why not utilize the amazing resources I have to serve my other needs?

OK, yeah, that makes sense. And it IS pretty unique, to have a multi-GPU system available at home but just idling when not at work. I think I’d still try to build a standalone second machine for that second user, though. You can then focus on making the big boy accessible from wherever you want to use it for gaming, which seems like a much more manageable, much less finicky challenge. That second computer would probably end up being relatively inexpensive to match the average use case for half of the big server thing. Definitely much less of a hassle. I’ve even had a gaming laptop serve that kind of purpose just because I needed a portable workstation with a GPU anyway, so it could double as a desktop replacement for gaming with someone else at home, but of course that depends on your needs.

And in that scenario you could also just run all that LLM/SD stuff in the background and make it accessible across your network, I think that’s pretty trivial whether it’s inside a VM or running directly on the same environment as everything else as a background process. Trivial compared to a fully virtualized gaming computer sharing a pool of GPUs, anyway.

Feel free to tell us where you land; it certainly seems like a fun, quirky setup either way.

GPU passthrough has been pretty good for a while. The reason why Linus couldn’t get it working reliably was because iirc, he tried to do it on windows… I’ve done it before with a single gpu and have very recently set it up again, now that I have a 2nd one and gotta say, it’s pretty damn good…

How are you handling displays and keyboard/mouse? Also what VM software?

It goes over all of the steps of setting it up.

I’ve recently tried to do that using Sunshine and different Linux gaming distros and it was awful: the VM was working great for a few minutes, then suddenly crashed and I had to hard stop it.

All the people that I’ve seen talking about it on the internet are using Windows VMs so I guess that I’m doing something wrong or the only way to do it is through a Windows VM, which I’ll not even try.

I run a gaming Linux VM on my server and it works fine.

Could you explain how? I’m pretty lost in this situation…

Hey, sorry I didn’t reply until now but life has been pretty hectic and I also kinda borked my streaming VM right at the same time as I wrote that. I ran Nobara Linux for a while with KDE on Xorg and it actually worked pretty well. Then I decided I wanted to give Bazzite a try but I didn’t like the whole immutable thing. I went back to Nobara just to find that Steam Remote Play straight up didn’t work, and I couldn’t tell if I had failed to set something up properly or Valve just broke it while I was “away”. A couple of days ago I decided to just abandon Remote Play for the time being and deployed Games on Whales, and it seems very promising so far. Much easier than fiddling with VMs and GPU passthrough, and Sunshine/Moonlight has never failed me.

No worries, LOL we followed exactly the same steps with the same problems, in fact, I was procrastinating documenting my problems in my Logseq and I think I’ll copy your explanation because it’s exactly my case in everything xd thanks ^^

> I’ve recently tried to do that using Sunshine and different Linux gaming distros and it was awful: the VM was working great for a few minutes, then suddenly crashed and I had to hard stop it.

Are you running this with something like libvirtd/qemu? If so, VFIO configurations can get pretty complex. Random crashes seem like MSI interrupt issues (or you’ve allocated too much RAM to the guest). Or it could be GPU reset issues that would also occur on the (Linux) host, a newer kernel and Mesa version in the guest may help.

Setting for the host kernel command line to work around crashes caused by unhandled MSR (model-specific register) accesses from the guest:

kvm.ignore_msrs=1
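If you want to confirm the option actually took effect after a reboot, a small Python check like the one below could help. It assumes a Linux host with the `kvm` module loaded, which exposes the parameter under `/sys/module/kvm/parameters/`; the function name is just illustrative.

```python
from pathlib import Path

# Sysfs file where the kvm module exposes this parameter on a Linux host.
PARAM_PATH = Path("/sys/module/kvm/parameters/ignore_msrs")


def ignore_msrs_enabled(path: Path = PARAM_PATH) -> bool:
    """Return True if the running kernel has kvm.ignore_msrs switched on."""
    try:
        value = path.read_text().strip()
    except FileNotFoundError:
        # kvm module not loaded, or this is not a Linux/KVM host.
        return False
    # Boolean module parameters read back as "Y" or "N".
    return value in ("Y", "1")


print("kvm.ignore_msrs enabled:", ignore_msrs_enabled())
```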

If you’re running on a Windows host or with something like Virtualbox (assuming GPU passthrough is supported by these), YMMV but I wouldn’t expect good results.

I’m using Proxmox with an NVIDIA 1050 GPU that I was passing through to another VM for jellyfin transcoding in docker (I don’t need it anymore), because of that I thought that the drivers were set up correctly.

The guest was Bazzite with 2 cores and 2 GB of RAM. I was not even gaming, just logging in to Steam and updating the system, and I had sudden crashes with Bazzite only using 1 GB according to the Summary…

Ah Nvidia. Bazzite uses Wayland I believe since it uses the same gamescope session as SteamOS (unless something has changed recently). While it may be possible to get it working, I’d expect a much better time with an AMD card.

A traditional distribution may be a better bet with Nvidia for now.

Thanks, I’ve also tried to change the autologin with x11 with no success, I’ll try with nobara, but I really liked the console-like features

You can use Proxmox to do most of this. Currently my setup will only pass through the GPU to one VM. I have heard of splitting the power among VMs but I have not gone down that rabbit hole. If I want to play with LLMs I fire up that server; if I want to game, I shut that down and fire up my Windows 10 VM.

In Proxmox they have VirGL-GPU and Virtio-GPU. They allow VMs to pass work to the GPU without being dedicated to one VM. I don’t think gaming was the intended use case and don’t know what kind of performance you would get. My uninformed guess is that it would not be great.

I’ve always found the documentation around Virtio-GPU and VirGL very lacking, and have never gotten them working. Would love to get pointers if anyone has a good source.

Craft computing has been chasing this for several years now. His most recent attempt being the most successful one. https://m.youtube.com/watch?v=RvpAF77G8_8

I got stardew working on a local network and playing on the miyoo mini. It was cool for the novelty, but had terrible performance outside a local network. After only a couple of hops it’s unplayable and will disconnect.

You should take a look at https://www.youtube.com/channel/UCp3yVOm6A55nx65STpm3tXQ. He has a series about doing something like this and goes into it in depth.

you beat me to it. this is the way.

As others have expressed, we’re already there. Understand, though, that the reason this hasn’t caught on in the mainstream is simple: the entire purpose of what you are asking runs counter to the standards of commercial capitalism. We are talking about efficiency, self-hosting, doing more with less, and cutting strings.

That said- understand that what you are undertaking is not dissimilar from building infrastructure in a company. You are building and expanding to meet your needs. Your needs are unique so there isn’t a ‘turn key’ solution that will fit perfectly… so you need to try things and see what works.

As far as things you are talking about specifically: you are going to ultimately be dipping your toes into the virtualization world… so xcp-ng and proxmox are good choices. If you can get your hands on older copies and uh… source a key or two: esxi is also very beginner friendly but won’t be able to upgrade thanks to their new pricing model. You seem like you are aware of the YouTube sphere so let me recommend 2GuysTech and the series on different hypervisors.

Once you decide on a hypervisor it’s as ‘simple’ as building a PC to meet your needs. If you have one already I’d start there to get a feel for how much you can pull out of it to determine how much you may need. You can probably split up a single GPU or just pass it through (cost vs. performance). LLMs are power/resource hungry, so that may require its own GPU.

If power is cheap by you you can look into older server hardware but honestly this can be a messy space to dabble in (noise, heat, power costs.)

From there play with services that fit your needs.

It’s very doable and there are some easier paths to take… certainly- but again the thing about homelabs is it’s very custom. This is why the community (in general) is willing to help. We all have had to forge the same path.

None of the presented solutions cover the aspect of being in a different place than the rack, the same network is fine, but at a minimum a different room.

How do you deliver high resolution (e.g. 1440p, 144 fps) to multiple monitors with low latency over a network? I haven’t seen anything like that accomplished without running fiber from the host.
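To put a number on why this is hard, here is a minimal Python sketch of the raw, uncompressed bitrate of one such display. It assumes 2560x1440 for "1440p", 24 bits per pixel, and ignores blanking intervals and protocol overhead, so treat it as a lower bound rather than a precise figure.

```python
def raw_video_gbps(width: int, height: int, fps: int, bits_per_pixel: int = 24) -> float:
    """Uncompressed video bitrate in gigabits per second (10^9 bits)."""
    return width * height * fps * bits_per_pixel / 1e9


# Assumed resolution for "1440p"; 144 fps is the figure from the question above.
one_stream = raw_video_gbps(2560, 1440, 144)
print(f"1440p @ 144 fps, uncompressed: {one_stream:.2f} Gbit/s per display")
```

A single stream like this comes out above what a 10 Gbit/s link can carry, which is why network-based approaches rely on hardware video codecs and why the uncompressed route means dedicated extenders.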

Eventually, your thin client will need too much power anyway, making the costs rise a lot. It makes sense in an office where you have 500 seats and you can load balance resources.

If someone can show me a multi seat gaming server that has native remote performance (as in you drag windows around in 144 fps, not the standard artifacty high latency behavior of vnc) I’ll eat a shoe.

Yep just ping time and latency make this a no go for a vast majority of us.

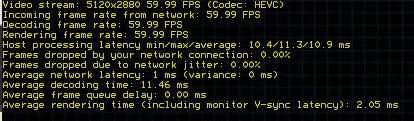

Can you define what acceptable latency would be?

local network ping (like corporate networks) 1-2ms

Encoding and decoding delay 10-15ms

So about ~20ms of latency
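The estimate above is just two ranges added together. A minimal Python sketch, using only the figures quoted in this comment (the function name is made up for illustration):

```python
def latency_budget_ms(
    network_ms: tuple[float, float], codec_ms: tuple[float, float]
) -> tuple[float, float]:
    """Add (min, max) ranges for network ping and encode/decode delay."""
    return (network_ms[0] + codec_ms[0], network_ms[1] + codec_ms[1])


# 1-2 ms local ping and 10-15 ms encode/decode, as quoted above.
low, high = latency_budget_ms((1, 2), (10, 15))
print(f"estimated added latency: {low}-{high} ms")
```

That sum lands a little under the rounded 20 ms figure, which leaves some headroom for anything not counted here, such as display and input processing.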

Real world example

> None of the presented solutions cover the aspect of being in a different place than the rack, the same network is fine, but at a minimum a different room.
>
> If someone can show me a multi seat gaming server that has native remote performance (as in you drag windows around in 144 fps, not the standard artifacty high latency behavior of vnc) I’ll eat a shoe.

Thin clients absolutely can do this already. There are a variety of ways to transmit low latency video around a home from HDBaseT solutions to multicast / network driven ones. Nevermind basic solutions like sunshine /moonlight… Nvidia variants etc.

I have a single racked PC for feeding my home, which has 3 ‘desk’ endpoints and two TVs… all of which are fed from the same location and can be dynamically matrixed (albeit the choke point is USB 2 to each location because I’m cheap). Latency is maybe 1.5-3 frames from live. Other solutions are normally around 5-8, which while higher are sufficiently snappy and won’t affect competitive play (professional level notwithstanding).

A lot of latency comes down to tuning your solution and research. The vnc method you refer to is the lowest common denominator running on ancient technology and codecs simply because it is a widely supported standard.

Edit: As far as 144 goes- I don’t have any displays that run that but I have two running at 120 with no issue.
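For comparison with latency figures given in milliseconds, frame counts can be converted with a one-line formula. This Python sketch assumes 60 Hz and 120 Hz refresh rates, since the comment does not say which rate the frame counts were measured at.

```python
def frames_to_ms(frames: float, refresh_hz: float) -> float:
    """Convert a delay measured in frames into milliseconds."""
    return frames * 1000.0 / refresh_hz


# Frame counts are the ones quoted above; the refresh rates are assumptions.
for hz in (60, 120):
    for frames in (1.5, 3, 5, 8):
        print(f"{frames} frames @ {hz} Hz = {frames_to_ms(frames, hz):.1f} ms")
```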

What is the cost of the thin clients and are you doing this over copper?

Are your desks multi monitor? To get the bare minimum in my households scenario I would need at least 12 streams at greater than 1080p

For 5 seats how much did it cost versus just having a computer in each location? For example looking at hdbaset to replace just my desk setup, I would need 4 ~$350 devices, just looking at monoprice for an idea (https://www.monoprice.com/product?p_id=21669) which doesn’t even cover all of the screens in my office.

The two workstation nooks (spaces) have the capability to have a second monitor but I’ve since retired them in favor of ultrawide monitors which I find are a better experience in general. My current working solution is a split between two technologies: one thin client (second monitors) and one network distribution solution using multicast (primary displays and USB). Both run on copper 1 gig but the multicast traffic requires a switch that doesn’t suck and vlan usage. On average a single port can reach 70-85% usage sustained. I believe my longest run is 150’ ish.

Cost per node is roughly 300- so comparable to what you are experiencing. If I went stupid cheap I could probably cut that to maybe 150-250 depending on my luck with eBay and patience.

In terms of capabilities, you could argue that this could be done without distribution using a NUC solution… but you’d have to split resources out to each node where you need a full feature set.

My central server is a threadripper build with 2 gpus for direct passthrough to ‘gaming’ vms and a split gpu handling the rest of the needs of the other systems. Thanks to the matrix capabilities any given seat can be any system… or in some cases 2 seats can be a single rig (2 room gaming off the same display). There is a cost savings to be found in splitting resources from a more expensive build out to cheaper nodes… but ymmv depending on active seats and specific needs. I believe as a general rule it should be less costly and more efficient (power/heat) than individual solutions.

Thanks for the breakdown! This is probably the most helpful breakdown I’ve seen of a build like this.

Absolutely 👍. I’ll just add that there are a lot of alternate routes to get the result you want so research and experiment but ideally set a deadline which can help with decision paralysis. Later changes are a problem for future you 😁.

Fiber isn’t some exotic, never-seen technology; it’s everywhere nowadays.

Moonlight literally does what you want, today, using HEVC encoding straight on the GPU.

Try it out on your own network now.

A DisplayPort-to-fiber extender is $2,000. The fiber is not for the network.

Moonlight does not do what I want. Moonlight requires a GPU on the thin client to decode, and you would need a high-end GPU to decode multiple high-resolution video streams. Also, afaik, Moonlight doesn’t support multiple displays.

Fair enough. If you know it doesn’t work for your use case that’s fine.

As demonstrated elsewhere in this discussion, GPU HEVC encoding only requires 10ms of extra latency, then it can transit over fiber optic networking at very low latency.

Many GPUs have HEVC decoders on board, including those in cell phones. Most newer Intel and AMD CPUs actually have an HEVC decoder pipeline as well.

I don’t think anybody’s saying a self-hosted GPU VM is for everybody, but it does make sense for a lot of use cases. And that’s where I think our schism is coming from.

As far as the $2,000 transducer to fiber… it’s doing the exact same thing, just with more specialized equipment and maybe a little bit lower latency.

100% ^^^ This.

You could do everything with OpenStack, and it would be a great learning experience, but expect to dedicate about 30% of your life to running and managing OpenStack. When it just works, it’s great… when it doesn’t… ohh boy, it’s like a CRPG which will unlock your hardware after you finish the adventure.

Can this solution deliver 3+ streams of high resolution (1440p or higher and 144fps) low latency video with no artifacting and near native performance and responsiveness?

Gaming has a high requirement for high-fidelity, low-latency I/O. No one wants to spend all this money on racks and thin clients and then get laggy windows and scrolling, artifacts, video compression, and low resolution.

That’s the problem at hand with a gaming server: if you want to replace a gaming desktop with a VM in a rack, you need to actually get the I/O to the user somehow, either through dedicated cables from the rack, fiber, or networking. The first is impractical: it involves potentially 100 ft runs of multiple DisplayPort, HDMI, USB, and other cables, and is very rigid in its application. The second is very expensive, pushing the price up to thousands of dollars per seat for DisplayPort/USB-over-fiber extenders. As for the third, I have yet to see a VNC/remote solution that can deliver near-native video performance.

I should reiterate, the op wants to do fidelity sensitive tasks, like video editing, they don’t just need to work on a spreadsheet.

Yes, for some definition of ‘low latency’.

GeForce Now, shadow.tech, and Luna all demonstrate this is done at scale every day.

Do your own VM hosting in your own datacenter and you can knock off 10-30ms of latency.

However you define low latency there is a way to iteratively approach it with different costs. As technology marches on, more and more use cases are going to be ‘good enough’ for virtualization.

Quite frankly, if you have an all-optical network, being 1 m away or 30 km away doesn’t matter.
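A quick way to sanity-check that claim is to compute the propagation delay through the fiber itself. This Python sketch assumes a refractive index of about 1.468 for silica fiber (a typical value, not something stated in this thread) and counts propagation only, not switching or encode/decode time.

```python
SPEED_OF_LIGHT_M_S = 299_792_458  # speed of light in vacuum
FIBER_REFRACTIVE_INDEX = 1.468    # assumed typical value for silica fiber


def fiber_delay_ms(distance_m: float, n: float = FIBER_REFRACTIVE_INDEX) -> float:
    """One-way propagation delay through optical fiber, in milliseconds."""
    return distance_m * n / SPEED_OF_LIGHT_M_S * 1000


# The two distances mentioned above.
for metres in (1, 30_000):
    print(f"{metres} m: {fiber_delay_ms(metres):.4f} ms one way")
```

Under these assumptions 30 km adds roughly 0.15 ms each way, which is small next to the 10-15 ms encode/decode delay quoted elsewhere in this thread.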

Just so we are clear, local isn’t always the clear winner, there are limits on how much power, cooling, noise, storage, and size that people find acceptable for their work environment. So there is some tradeoff function every application takes into account of all local vs distributed.

Right, but who has the resources to rent compute with multiple GPUs, this is a gaming setup, not office work, and the op was talking about racking it.

All of those services offer an inferior experience to being at the hardware; it’s just not the same. Seriously, try it with multiple 1440p 144 Hz displays. It just doesn’t work out well, and you are getting a compromised product for a higher cost. You need a good GPU (or at least a way to decode multiple HEVC streams) in the client, so you can’t just run a standard thin client.

‘low latency’ is a near native experience, I’m talking, you sit down at your desk and it feels like you are at your computer(as to say, multiple monitors, hdr, USB swapping, Bluetooth, audio, etc, all working seamlessly without noticeably diminished quality), anything less isn’t worth it, since you can just, use your computer like normal.

Remember, the original poster here was talking about running their own self-hosted GPU VM, so they’re not paying anybody else for the privilege of using their hardware.

I personally stream with Moonlight on my own network. I have no issues; it’s just like being at the computer from my perspective.

If it doesn’t work for you, fair enough, but it can work for other people, and I think the original poster’s idea makes sense. They should absolutely run a GPU VM cluster and have fun with it, and it would be totally usable.

Yea I do, you brought up that local isn’t always the option.

I desperately want it to work for me, I just can’t get it to work without spending thousands of dollars on hardware just to get back to the same experience as having a regular desktop at my desk.

Okay. Do you want to debug your situation?

What’s the operating system of the host? What’s the hardware in the host?

What’s the operating system in the client? What’s the hardware in the client?

What does the network look like between the two? Including every piece of cable, and switch?

Do you get sufficient experience if you’re just streaming a single monitor instead of multiple monitors?

This. Exactly. Many solutions exist but need to be selected based on scale and personal needs.