Yeah, you make really good points, but we get also get the kind of bad faith actor that browses all and downvote community content whenever they see it.

Yeah, you make really good points, but we get also get the kind of bad faith actor that browses all and downvote community content whenever they see it.

You don’t have to like anything, but consistent downvoting like this without any other kind of participation is indistinguishable from targeted downvote harassment, and isn’t consistent with your claims of blocking.

I’m a moderator on [email protected], where you’ve never upvoted once, but you have downvoted multiple posts over months instead of blocking the community. This down-ranks submissions, adversely affecting the visibility of the community for subscribers, which is why you were banned.

https://lemmy.dbzer0.com/post/28218497

https://lemmy.dbzer0.com/post/35031819

This doesn’t mean you can misrepresent facts like this though. The line I quoted is misinformation, and you don’t know what you’re talking about. I’m not trying to sound so aggressive, but it’s the only way I can phrase it.

Generating an AI voice to speak the lines increases that energy cost exponentially.

TTS models are tiny in comparison to LLMs. How does this track? The biggest I could find was Orpheus-TTS that comes in 3B/1B/400M/150M parameter sizes. And they are not using a 600 billion parameter LLM to generate the text for Vader’s responses, that is likely way too big. After generating the text, speech isn’t even a drop in the bucket.

You need to include parameter counts in your calculations. A lot of these assumptions are so wrong it borders on misinformation.

It helps to think of fights against monsters as a turn-based encounter. As long as you can dodge or the monster misses its attack, you should be able to land a hit. If you get hit or are too far away when the monster attacks, you probably won’t be able to land any meaningful offense or heal without getting punished for it.

I’m not discussing the use of private data, nor was I ever. You’re presenting a false Dichotomy and trying to drag me into a completely unrelated discussion.

As for your other point. The difference between this and licensing for music samples is that the threshold for abuse is much, much lower. We’re not talking about hindering just expressive entertainment works. Research, reviews, reverse engineering, and even indexing information would be up in the air. This article by Tori Noble a Staff Attorney at the Electronic Frontier Foundation should explain it better than I can.

Private conversations are something entirely different from publically available data, and not really what we’re discussing here. Compensation for essentially making observations will inevitably lead to abuse of the system and deliver AI into the hands of the stupidly rich, something the world doesn’t need.

I mean realistically, we don’t have any proper rules in place. The AI companies for example just pirate everything from Anna’s Archive. And they’re rich enough to afford enough lawyers to get away with that. And that’s unlike libraries, which pay for books and DVDs in their shelves… So that’s definitely illegal by any standard.

You can make temporary copies of copyrighted materials for fair use applications. I seriously hope there isn’t a state out there that is going to pass laws that gut the core freedoms of art, research, and basic functionality of the internet and computers. If you ban temporary copies like cache, you ban the entire web and likely computers generally, but you never know these days.

Know your rights and don’t be so quick to bandwagon. Consider the motives behind what is being said, especially when it’s two entities like these battling it out.

You have to remember, AI training isn’t only for mega-corporations. By setting up barriers that only benefit the ultra-wealthy, you’re handing corporations a monopoly of a public technology by making it prohibitively expensive to for regular people to keep up. These companies already own huge datasets and have whatever money they need to buy more. And that’s before they bind users to predatory ToS allowing them exclusive access to user data, effectively selling our own data back to us. What some people want would mean the end of open access to competitive, corporate-independent tools and would leave us all worse off and with fewer rights than where we started.

The same people who abuse DMCA takedown requests for their chilling effects on fair use content now need your help to do the same thing to open source AI. Their next greatest foe after libraries, students, researchers, and the public domain. Don’t help them do it.

I recommend reading this article by Cory Doctorow, and this open letter by Katherine Klosek, the director of information policy and federal relations at the Association of Research Libraries. I’d like to hear your thoughts.

You’re moving the goalposts. Your original reply made no mention of co-authorship by a human, it was just one sweeping statement.

AI art is not protected by copyright, yes. That isn’t a “should” but rather how it actually works in nearly all countries but a few, certainly including the US.

But they do, explicitly:

Many popular AI platforms offer tools that encourage users to select, edit, and adapt AI- generated content in an iterative fashion. Midjourney, for instance, offers what it calls “Vary Region and Remix Prompting,” which allow users to select and regenerate regions of an image with a modified prompt. In the “Getting Started” section of its website, Midjourney provides the following images to demonstrate how these tools work.136

Unlike prompts alone, these tools can enable the user to control the selection and placement of individual creative elements. Whether such modifications rise to the minimum standard of originality required under Feist will depend on a case-by-case determination.138 In those cases where they do, the output should be copyrightable. Similarly, the inclusion of elements of AI-generated content in a larger human-authored work does not affect the copyrightability of the larger human-authored work as a whole.139 For example, a film that includes AI-generated special effects or background artwork is copyrightable, even if the AI effects and artwork separately are not.

This isn’t true. You should read the latest guidance by the United States Copyright Office.

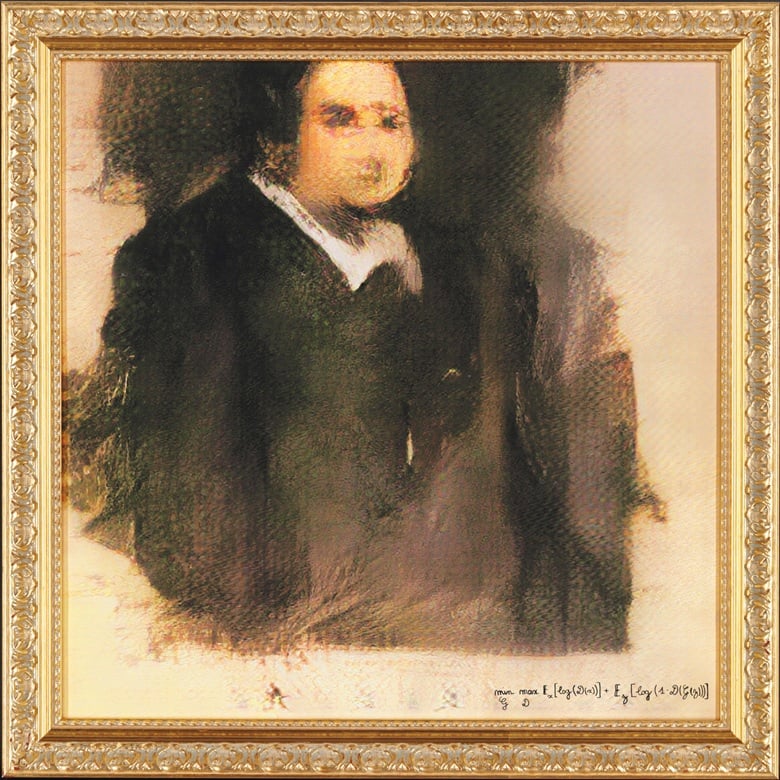

I see it as a time capsule, capturing a moment in time in the medium’s evolution. I mean, check out the first ever AI-generated image that sold for $432,500 USD back in 2018:

Fuck that guy.

Jesus fucking christ. Only four more years. I hope.

Fair use isn’t a loophole, it is copyright law.

I’m open to feedback.